The real danger is not AI, but the “media hype” that accompanies it

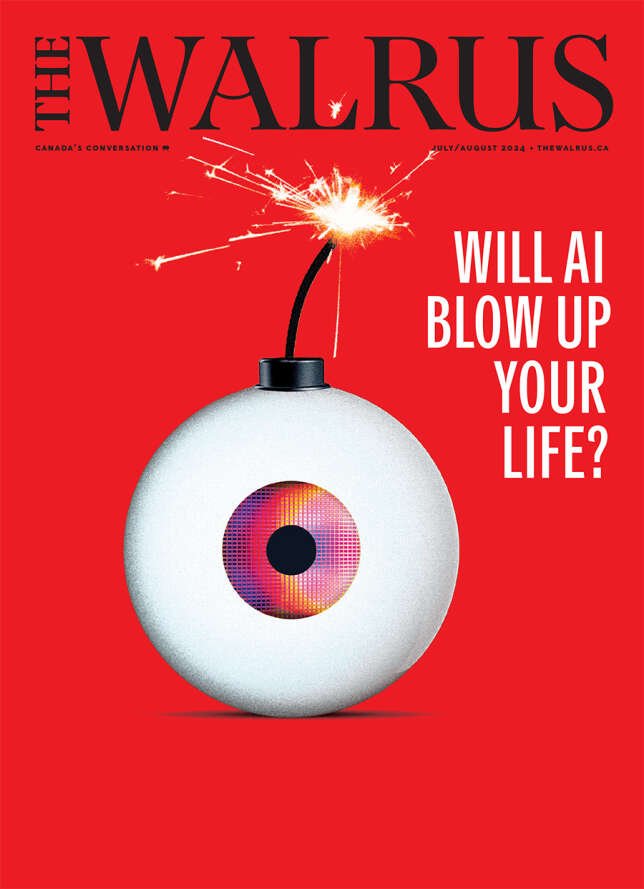

The spark is bright, the fuse almost burned down, the bomb ready to explode. This bomb is in fact an eye with an unusual iris, a heavily pixelated mix of purple and yellow that looks almost artificial.

This superhuman eye about to burst is the metaphor chosen by the Canadian magazine The Walrus to evoke the threat of artificial intelligence, but above all “all the mystification around this technology”.

“The real threat from superintelligences is being fooled by the media hype that surrounds them,” argues journalist Navneet Alang, who wrote the cover story.

The search for a new god

Since the arrival of ChatGPT in our lives, panic has been everywhere. There are writers who fear becoming obsolete, governments trying to regulate AI, and academics in disarray.

On the other side, “companies have jumped on the craze train”, like Microsoft and Meta, which are spending billions. Moreover, funding for AI start-ups “reached nearly 50 billion US dollars in 2023”, The Walrus notes.

This enthusiasm is based in part on AI’s ability to process millions of factors at once, an ability that far exceeds “that of humans”, the Toronto magazine acknowledges.

However, the main tasks of AI are “confined to actions that we already carry out on a daily basis”, like sorting emails. So if AI takes up so much space, The Walrus suggests, it is because we have become obsessed with the fear of losing control of “an intelligence that is millions of steps ahead of us”.

But then again, although computers “can approach what we call thought”, they “do not dream, do not want, do not desire”, the magazine points out.

Ultimately, when our respective strengths are compared, “we are far from being inferior to AI”. In reality, “the gap that we perceive is mainly linked to our ignorance of their real functioning”.

Seeking to “use AI to tackle structural problems, like climate change or growing anti-immigration sentiment, is an illusion,” insists The Walrus, which argues that what will hinder progress on these issues “will ultimately be what holds everything back: us.”

In any case, AI works from the biases that already exist in our societies, since learning models absorb “masses of data based on what is and what has been”, including misogynistic, racist and more broadly discriminatory representations.

“If you find yourself asking AI about the meaning of life, it’s not the answer that’s wrong: it’s the question,” writes Navneet Alang, who believes that the only question that should be bothering us is to “know why we need a ‘digital god’ so much”. Is turning to AI to make sense of the world already proof that the world no longer makes sense?